A Security & Privacy Focused Phone with a Secure Supply Chain

The Liberty Phone retains the software security and privacy features of the Librem 5 while adding a transparent, secure supply chain with manufacturing in the USA. The Liberty Phone also has 4GB of memory and 128GB built-in storage.

Starting at $1,999

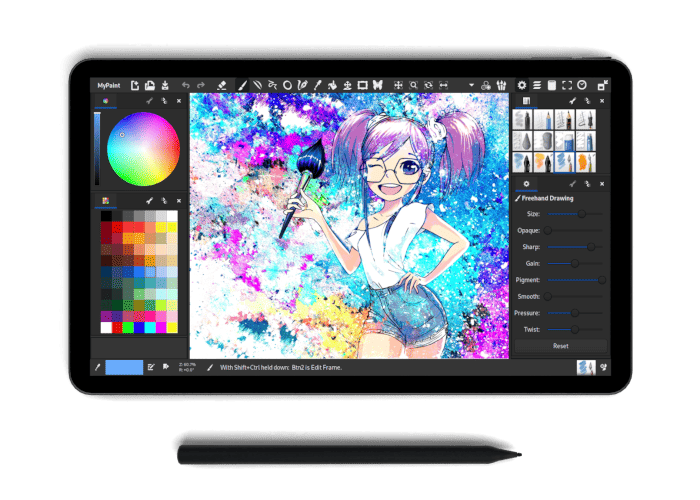

A Powerful Tablet with Freedom in Mind

A powerful 4-core tablet with an AMOLED display, a pen with 4096 pressure levels, and a detachable keyboard. It lets you express your creativity anywhere, anytime.

Shipping with PureBoot (coreboot + Heads) and PureOS, the Librem 11 is fully yours and respects your Privacy, Security and Freedom by default.

Starting at $999

A Security & Privacy Focused Phone

The Librem 5 is the original Linux kernel-based phone produced by Purism, with 3GB of memory and 32GB of storage.

Starting at $799

Privacy-focused cellular plan for the Librem 5 and other unlocked phones.

Starting at $39/month

In her book Cyberselfish: A Critical Romp Through the Terribly Libertarian Culture of Silicon Valley, published in 2000, Borsook, who is based in Palo Alto, California, and has previously written for Wired and a host of other industry publications, took aim at what she saw as disturbing trends in the tech industry.

With our sights set on the beta release milestone, one key component still remains: a way to upgrade from Byzantium to Crimson. If you're a Linux expert, you might already know how Debian handles release upgrades. Some eager individuals have already upgraded from Byzantium to the Crimson alpha this way. However, we need an easy, graphical upgrade procedure, so everyone with a Librem 5 can get the improvements coming in Crimson.

NBC News reports that Trump Mobile customers have been waiting months for a promised ‘Made in the USA’ smartphone, originally announced for August delivery. The T1 phone was marketed as domestically produced, but delays and vague updates have raised skepticism. References to ‘Made in the USA’ have been removed from the company’s site, and leaked images suggest the device resembles existing Chinese-made models. This situation underscores the complexity of building smartphones in America without established infrastructure.